In today’s enterprise landscape, agility and reliability go hand-in-hand. As organizations modernize legacy infrastructure and scale operations across borders, the challenge is no longer just about moving fast – it’s about moving smart. That’s where the combination of Redgate’s powerful database DevOps tools and Microsoft Azure’s cloud-native ecosystem shines brightest.

At the intersection of robust tooling and scalable infrastructure, the goal is a framework that supports high-volume conversions, minimizes risk, and enables continuous delivery across database environments. Adding Redgate’s Flyway strengthens that framework by managing schema changes through versioned, migration-centric workflows.

Let’s unpack what this looks like behind the scenes.

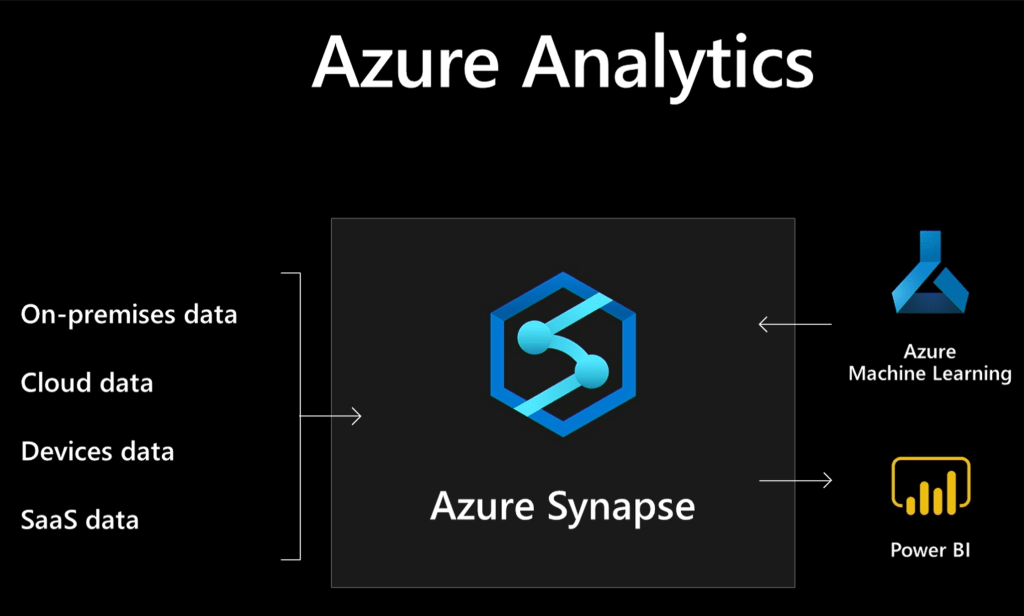

Core Architecture: Tools That Talk to Each Other

- Flyway Enterprise and Redgate Test Data Manager: Flyway Enterprise supports build and release orchestration, lightweight schema versioning, and traceability, while giving rollback confidence. Test Data Manager supports privacy compliance.

- Azure SQL + Azure DevOps: Targeting cloud-managed SQL environments while using Azure DevOps for CI/CD pipeline orchestration and role-based access controls.

- Azure Key Vault: Centralized secrets management, allowing secure credential handling across stages.

The architecture aligns development and ops teams under a unified release process while keeping visibility and auditability at every stage.

Versioned Migrations with Flyway

Flyway introduces a migration-first mindset, treating schema changes as a controlled, versioned history. It’s especially valuable during conversions, where precision and rollback capability are paramount.

A typical Flyway migration script looks like this:

    -- V3__add_conversion_log_table.sql
    CREATE TABLE conversion_log (
        id INT IDENTITY(1,1) PRIMARY KEY,
        batch_id VARCHAR(50),
        status VARCHAR(20),
        created_on DATETIME DEFAULT GETDATE()
    );

This is tracked by Flyway’s metadata table (flyway_schema_history), allowing us to confirm applied migrations, detect drift, and apply changes across environments consistently.
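To make the versioned history concrete, here is a minimal Python sketch of how pending migrations could be worked out from file names and the set of versions already recorded in flyway_schema_history. It relies on Flyway’s default `V<version>__<description>.sql` naming. The function names, the extra file names, and the `applied` set are hypothetical and only for illustration; this is not Flyway’s own implementation.

```python
import re
from typing import NamedTuple

# Flyway's default naming for versioned migrations: V<version>__<description>.sql
MIGRATION_NAME = re.compile(
    r"^V(?P<version>\d+(?:[._]\d+)*)__(?P<description>\w+)\.sql$"
)


class Migration(NamedTuple):
    version: tuple[int, ...]  # (2, 1) for "V2_1__..." or "V2.1__..."
    description: str
    filename: str


def parse_migration(filename: str) -> Migration:
    """Split a versioned migration file name into version and description."""
    match = MIGRATION_NAME.match(filename)
    if match is None:
        raise ValueError(f"not a versioned migration: {filename}")
    version = tuple(int(part) for part in re.split(r"[._]", match["version"]))
    return Migration(version, match["description"].replace("_", " "), filename)


def pending_migrations(filenames, applied_versions):
    """Return migrations not yet applied, ordered numerically by version."""
    migrations = sorted(parse_migration(name) for name in filenames)
    return [m for m in migrations if m.version not in applied_versions]


# Hypothetical repository contents and applied versions (illustration only).
files = [
    "V3__add_conversion_log_table.sql",
    "V1__baseline.sql",
    "V2_1__add_batch_index.sql",
    "V10__archive_old_batches.sql",
]
applied = {(1,), (2, 1)}

pending = pending_migrations(files, applied)
print([m.filename for m in pending])
```

Versions are compared as tuples of integers rather than as strings, so V10 sorts after V3 instead of before it.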

CI/CD Pipelines: From Code to Cloud

Azure DevOps orchestrates the full database build and deployment cycle. Each commit triggers Flyway Enterprise and Redgate Test Data Manager stages that:

- Confirm schema changes.

- Package migration scripts.

- Mask sensitive data before test deployment.

- Deploy to staging or production environments based on approved gates.

    steps:
      - task: Flyway@2
        inputs:
          flywayCommand: 'migrate'
          workingDirectory: '$(Build.SourcesDirectory)/sql'
          flywayConfigurationFile: 'flyway.conf'

This integration allows engineers to treat their database as code – reliable, scalable, and versioned – without losing the nuance that data systems demand.
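One way to picture the “confirm schema changes” stage is as a checksum comparison: a migration that has already been applied should not change afterwards. The Python sketch below illustrates that idea under stated assumptions. It uses CRC32 from the standard library, the helper names are hypothetical, and Flyway’s own checksum calculation may differ in detail.

```python
import zlib


def checksum(sql_text: str) -> int:
    """CRC32 of the script with line endings normalised to LF."""
    # A CRLF/LF difference alone should not be reported as a change.
    normalised = "\n".join(sql_text.splitlines())
    return zlib.crc32(normalised.encode("utf-8"))


def changed_migrations(recorded: dict[str, int], current_scripts: dict[str, str]) -> list[str]:
    """Return script names that are missing or no longer match the recorded checksum."""
    return sorted(
        name
        for name, expected in recorded.items()
        if name not in current_scripts or checksum(current_scripts[name]) != expected
    )


# Hypothetical data: one applied migration and its checksum at apply time.
name = "V3__add_conversion_log_table.sql"
original = "CREATE TABLE conversion_log (id INT);\n"
recorded = {name: checksum(original)}

# Same content with Windows line endings: treated as unchanged.
unchanged = changed_migrations(recorded, {name: original.replace("\n", "\r\n")})
# Content edited after it was applied: flagged.
edited = changed_migrations(recorded, {name: original + "-- hotfix\n"})

print(unchanged)
print(edited)
```

A pipeline stage built on this idea would fail the build whenever the returned list is non-empty, forcing the change into a new versioned migration instead.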

Compliance, Transparency & Trust

Redgate tools also ensure that conversion efforts meet enterprise-grade audit and compliance standards:

- Drift Detection & Undo Scripts via Flyway Enterprise for rollback precision.

- Immutable Audit Trails captured during each migration and deployment step.

- Masked Test Data with Redgate Data Masker, so sensitive information is protected during QA stages.
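As a rough illustration of what masking means for test data, the Python sketch below replaces sensitive values with stable, non-reversible tokens. It is not how Redgate’s tooling works internally. The `customer_email` column, the sample rows, and the function names are hypothetical, and a real pipeline would read the secret from a store such as Azure Key Vault rather than hard-coding it.

```python
import hashlib
import hmac


def mask_value(value: str, secret: bytes) -> str:
    """Replace a sensitive value with a stable, non-reversible token."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"masked_{digest[:12]}"


def mask_rows(rows: list[dict], sensitive_columns: set[str], secret: bytes) -> list[dict]:
    """Return copies of the rows with sensitive columns masked; None stays None."""
    masked = []
    for row in rows:
        masked.append({
            column: (
                mask_value(str(value), secret)
                if column in sensitive_columns and value is not None
                else value
            )
            for column, value in row.items()
        })
    return masked


# Demo-only secret (assumption); never hard-code a real one.
DEMO_SECRET = b"demo-only-secret"

# Hypothetical rows for illustration.
rows = [
    {"batch_id": "B-001", "status": "complete", "customer_email": "ana@example.com"},
    {"batch_id": "B-002", "status": "failed", "customer_email": "ana@example.com"},
    {"batch_id": "B-003", "status": "complete", "customer_email": None},
]

masked_rows = mask_rows(rows, {"customer_email"}, DEMO_SECRET)
for row in masked_rows:
    print(row)
```

Because the same input always yields the same token, joins and duplicate checks on the masked column still behave as they would on the real data, which keeps QA results meaningful.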

Performance Gains & Operational Impact

Implementing this strategy, I’ve seen:

- Deployment velocity increase 3x.

- Conversion accuracy improve with automated validation steps.

- Team alignment improve with shared pipelines, version history, and documentation.

Most importantly, database deployment is no longer a bottleneck – it’s a competitive advantage.

Getting Back to the Basics

While the tools are powerful, the focus remains on strengthening foundational discipline:

- Improve documentation of schema logic and business rules.

- Standardize naming conventions and change control processes.

- Foster cultural alignment across Dev, Ops, Data, and Architecture teams.

Database DevOps is both a practice and a mindset. The tools unlock automation, but the people and processes bring it to life.

Final Takeaway

Redgate + Azure, now powered by Flyway, isn’t just a tech stack; it’s a strategic framework for high-impact delivery. It lets you treat database changes with the same agility and discipline as application code, empowering teams to work faster, safer, and more collaboratively.

For global organizations managing complex conversions, this approach provides the blueprint: automate fearlessly, confirm meticulously, and scale intelligently.